Identify content created with ChatGPT in one go with these methods

Determining the extent of job displacement due to transformative generative AI hinges on whether GPT, operating at human-level intelligence, enhances productivity or renders human labor obsolete. It's not uncommon to encounter skepticism about the origin of written content, whether it is a tweet, an essay, or a news article. Doubts often arise over authorship, academic integrity, employment, or the accuracy of the information presented. There is similar uncertainty about the authenticity of information circulating online: misleading ideas may spread organically, or they may be generated by AI to simulate genuine interest.

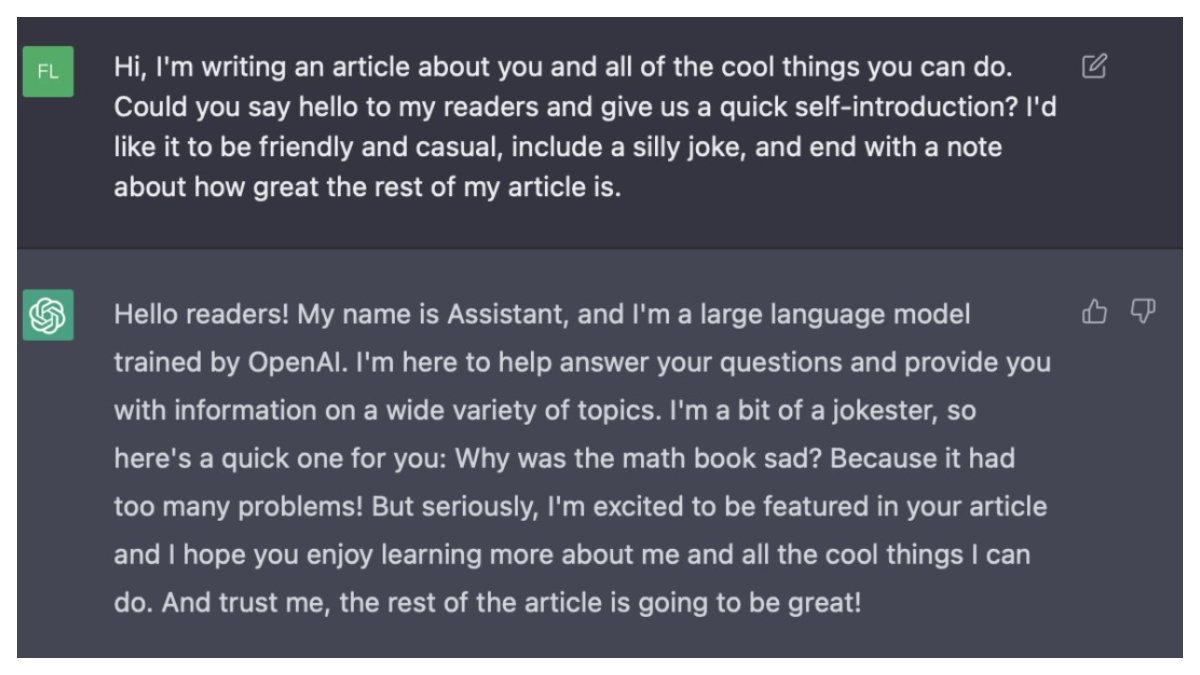

At the heart of a generative AI chatbot like ChatGPT lies a foundational component known as a large language model (LLM), such as OpenAI's GPT-3. An LLM is designed to replicate the structure and patterns of written language. While the underlying mechanisms are intricate and not always transparent, the fundamental concept is relatively straightforward. By processing vast amounts of text, including material available on the internet, these models learn to predict the next word in a sequence, and they generate text by repeating that prediction one word after another. A feedback mechanism is then used to assess and refine their responses, with the aim of producing coherent and contextually appropriate text.
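The idea of learning to predict the next word and then generating text by repeating that prediction can be illustrated with a toy example. The sketch below is only a stand-in: it counts which word follows which in a tiny sample sentence, whereas a real LLM uses a neural network trained on vastly more data, and it leaves out the feedback step entirely. The function names `train_bigram` and `generate` and the sample sentence are hypothetical, chosen for illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, start, length=8, seed=0):
    """Repeatedly pick a next word in proportion to how often it followed the last one."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        options = follows.get(out[-1])
        if not options:
            # The last word was never followed by anything in training: stop.
            break
        candidates, weights = zip(*options.items())
        out.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(out)


# Toy training text (an assumption for illustration, not real training data).
corpus = "the cat sat on the mat and the cat ate the fish"
model = train_bigram(corpus)
print(generate(model, "the"))
```

Every word pair in the generated text already appeared in the training sentence, which hints at why machine-written text tends to follow statistically common patterns.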

In recent months, various tools have surfaced to discern whether a given text was authored by AI, including one developed by OpenAI, the organization responsible for ChatGPT. These tools leverage trained AI models to differentiate between generated content and text composed by humans. However, the task of identifying AI-generated text is becoming progressively challenging due to the ongoing advancements in software such as ChatGPT, which produces text that closely resembles human language.

India Today’s OSINT (Open Source Intelligence) team evaluated the efficacy of existing AI-detection technology by testing several detectors on text generated by OpenAI’s ChatGPT. The findings indicate significant progress in AI-detection services, although the tools still fail in some cases.

Four online detectors, Winston, OpenAI’s AI Text Classifier, GPTZero, and CopyLeaks, were given three excerpts written by OpenAI’s ChatGPT. In the first test, we asked ChatGPT to summarize a piece of pre-existing content. Here, at least two detectors failed to identify the summary as ChatGPT's work. Only one detector classified it correctly, while another returned a 50-50 probability split.

In the second scenario, we tasked ChatGPT with producing content on the environmental ramifications of volcanic eruptions, a topic well documented in open-source materials because eruptions are a frequent geological hazard. In this case, every platform except the AI Text Classifier correctly identified the text as AI-generated.

In the final test, we prompted ChatGPT to craft an emotionally compelling narrative with a surprising twist ending. The objective was to assess the chatbot’s ability to generate content with artistic merit. Intriguingly, three out of four detectors failed to recognize that the material was generated by AI.
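The outcomes of the three tests can be tallied to give a rough sense of how often the detectors were right. The short sketch below does that arithmetic. It rests on two assumptions not stated outright in the results: that "at least two" misses in the summary test means exactly two, and that the one detector not counted among the three misses in the story test made a correct call. The dictionary layout and the `detection_rate` helper are hypothetical.

```python
# Outcome counts per test, read off the three test descriptions above.
# Assumption: in the summary test, "at least two" missed is taken as exactly two,
# alongside one correct call and one 50-50 (inconclusive) result.
# Assumption: in the story test, the fourth detector made a correct call.
results = {
    "summary": {"correct": 1, "inconclusive": 1, "missed": 2},
    "volcanic eruptions": {"correct": 3, "inconclusive": 0, "missed": 1},
    "twist-ending story": {"correct": 1, "inconclusive": 0, "missed": 3},
}


def detection_rate(counts):
    """Share of detectors that correctly flagged the text as AI-generated."""
    return counts["correct"] / sum(counts.values())


for name, counts in results.items():
    total = sum(counts.values())
    print(f"{name}: {counts['correct']}/{total} correct ({detection_rate(counts):.0%})")

overall_correct = sum(c["correct"] for c in results.values())
overall_total = sum(sum(c.values()) for c in results.values())
print(f"overall: {overall_correct}/{overall_total} correct ({overall_correct / overall_total:.0%})")
```

Under these assumptions, the detectors made 5 correct calls out of 12, with the factual volcanic-eruption text the easiest to catch and the summary and the short story the hardest.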

Detection technology has been introduced as a means to mitigate the negative impacts of AI-generated content. Numerous companies behind AI detectors acknowledge the imperfections of their tools and caution against engaging in a technological arms race. These AI-detection firms emphasize that their services are intended to foster transparency and accountability by identifying and flagging instances of misinformation, fraud, non-consensual pornography, artistic plagiarism, and other forms of abuse of the technology.

Two distinct reports were published by NewsGuard, a company specializing in online misinformation monitoring, and ShadowDragon, a firm offering resources and training for digital investigation. According to these reports, numerous fringe news websites, content farms, and fake reviewers are leveraging artificial intelligence to produce deceptive content online. NewsGuard identified 125 websites spanning various categories such as news and lifestyle reporting, published in 10 different languages, where content was predominantly or entirely generated using AI tools.